We know that search has evolved beyond simple text-based question-and-answer retrieval: we can search through traditional means or newer ones like voice, and now even our smartphone cameras.

As part of Google's Search On event, Aparna Chennapragada took us through three core areas, looking at how Google has been enhancing existing features and how we can expect them to develop further over the coming months.

1. Learning.

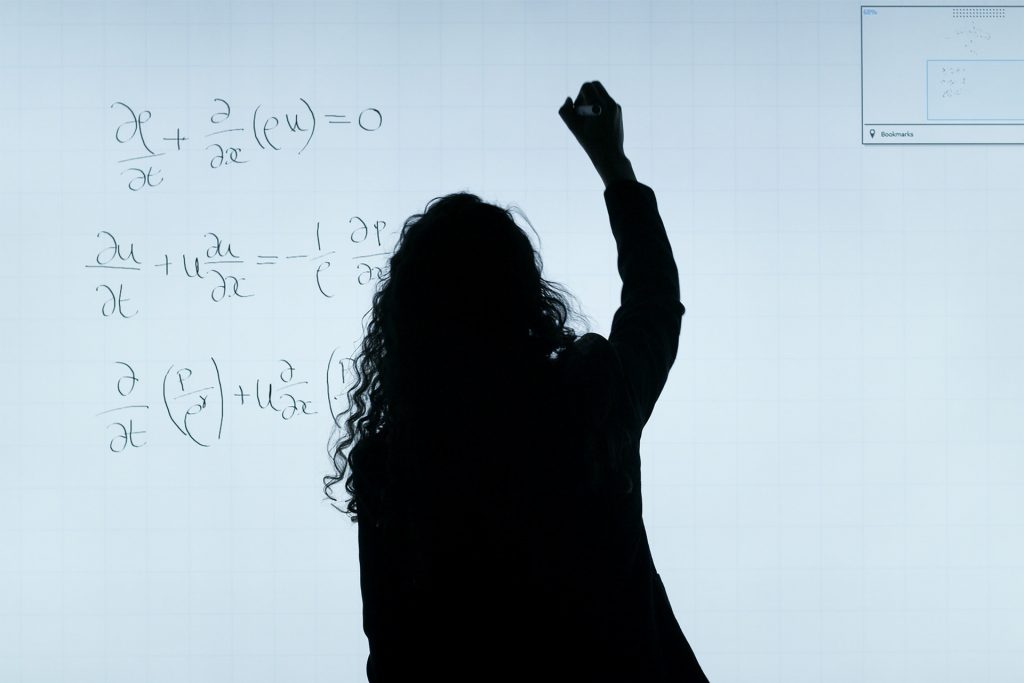

Beyond more generic queries, you can now use various search methods to learn, especially for hard-to-search things like equations or foreign languages. Google has been developing AI to understand the question and the underlying problem, and then provide you with the tools and resources you need to answer your query.

Thanks to developments in Google Lens, you can now take a photo of what you need and Google can interpret it to provide you with answers. So if you're in a foreign country and don't understand the language on a menu or a sign, you can take a picture of it and Google Lens can now translate it and read it out loud.

With so much learning happening at home at the moment, Google Lens has also been developed to understand homework questions such as equations, translate them into queries, and then provide the answer along with step-by-step guides and videos on how to solve them. The latest development goes one step further, incorporating AR to help searchers understand and explore topics, bringing learning to life and offering a much more in-depth search experience.

The final part of Google's Learning enhancements comes from the Data Commons database. While this has been available for a couple of years, Google is now exposing Data Commons as a new layer of the Knowledge Graph. This means you can ask Google a question such as "tell me the crime level in Chicago", and Google aggregates a huge amount of data to present the information back to searchers in easily digestible, visual formats, answering the query as effectively and succinctly as possible.

2. Shopping.

Improvements to shopping have been well documented throughout 2020, and among them, the release of free listings will be fundamental for many merchants for the rest of the year. We recently wrote a blog post on the free Google Shopping listings coming to the UK, what that means, and what you need to do to have them show.

However, there are also changes to how people can shop. Going back to Google Lens, from next month you'll be able to long-press on images on Google to search the image itself. With that, the way we shop is poised to change forever: Google's "style engine" technology will let you search an image for the exact or similar items, as well as suggesting ways to style them. Think the Clueless wardrobe at a monumental scale: take the largest product database in the world, combine it with AI, and produce a style playbook based on one of the largest image databases in the world, if not the largest. Everything you could ever need to shop and find your style is right at your fingertips. Say goodbye to going to the shop to find your style, and say hello to long-press searches and adding to your basket.

Finally, Google has been investing heavily in AR to bring the shopping experience closer to searchers. People aren't going into shops any more, so one of the first industries Google is looking at is automotive: a high-consideration, high-value purchase. If you search for a new car on Google and are connected to a high-speed network, you will now be able to bring the car to you through Google's cloud streaming technology. That means you can put it on your drive, on the beach or at the top of a mountain, change the colours and materials to build your ideal car, and check out all of its intricate features as you would in real life. This is a huge step towards improving the high-consideration purchase experience.

3. Understanding the World Around You.

Google Maps has seen some big improvements over the last couple of years, and last year Live View was introduced to utilise AR for heads-up direction displays. Over the next few months, this is being enhanced further to bring live business information into these displays as well.

That means that, as well as directions, Live View will use AR to show you things like restaurant opening times, when places get busy, star ratings and more, simply by pointing your camera at the building. This level of information will only increase the popularity of Google Maps, and with more and more features being built in, it is going to become imperative for local businesses to have a solid presence on Google.

Check out the rest of our Search On roundups here:

- Delivering a Higher Standard of Google Search (talk 1)

- 3 New Advancements in Search (talk 3)

- Or check out the full event recording here