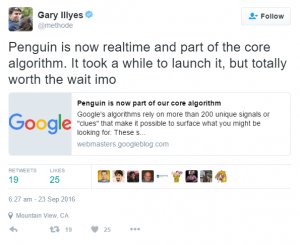

(September 23rd 2016) The long-awaited Penguin 4.0 update has finally been released and integrated into the core algorithm, according to an official post published on Google’s Webmaster Central Blog today.

What is Penguin? As of today, it’s the portion of Google’s core algorithm which assesses whether the backlinks pointing to a site comply with Google’s Webmaster Guidelines, using this information to help determine overall rankings.

After countless false alarms and forum chatter bordering on conspiracy theory, it seems remarkable to finally have official confirmation of the final release of Penguin 4.0. The long wait and the many false starts in the story of this final iteration of the Penguin algorithm have left many webmasters and SEO professionals exasperated.

The update differs from previous Penguin updates in two important respects.

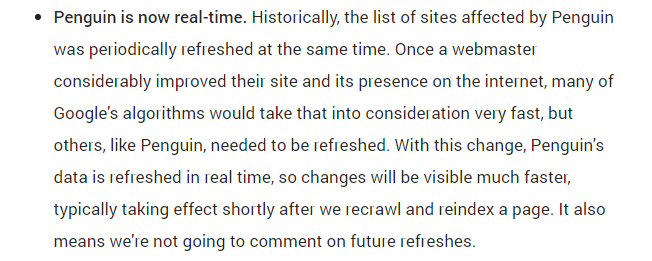

1. It’s “real-time”.

Whether it is actually as fast as true real-time is debatable, but it looks as though we can expect the Penguin algorithm to recalculate its effect on rankings relatively quickly – perhaps within a few days or less. The only way to know how fast it really is will be to wait and see.

The major implication of this is that websites losing visibility from Penguin may be able to reclaim some of the lost visibility in a matter of days by making their referring domains compliant with Google’s Webmaster Guidelines. This is a stark contrast to the situation since the last Penguin update. Webmasters hit by Penguin have been stuck in the sin bin, missing out on organic revenue and waiting for this update in the hope that their efforts to bring their backlink profile up to code have been worthwhile. In the coming days they will find out.

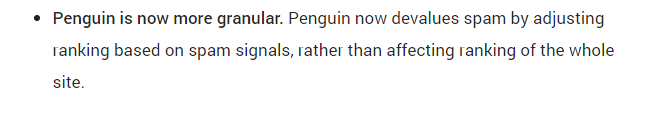

2. It’s “more granular”.

This point is less clear than the first. What it seems to be saying is that Penguin previously worked on a domain basis. It doesn’t specifically say this is no longer the case, or what the new basis is, short of basing the recalculated rankings on “spam signals”. Might this mean that Penguin will now be working on more of a page-by-page basis? Unfortunately, we cannot confirm this until either Google clarifies its wording or we begin to see how this change is really affecting webpage rankings in the wild.

Update: A Google spokesperson has since expanded slightly on this, stating that the algorithm being more granular does not mean that it only affects pages – it means that it acts at a finer granularity than sites as a whole. However, this adds little clarification and does not rule out that Penguin is being applied at a page level as well as at the domain level.

Penguin 4.0 in context

Google sets the Penguin 4.0 algorithm in context at the end of its post, reminding webmasters that it is now just one of over 200 signals used to determine rankings. While it is indeed important to remember this, it’s likely that Penguin accounts for considerably more than 1/200th of the equation. The post rounds off by reiterating that webmasters should feel free to focus on creating amazing, compelling websites – sound advice no matter what algorithmic animal is taking headlines.

5 steps to managing Penguin 4.0 in real-time

1. Monitor your organic traffic and rankings at both the domain level and the page level over the next few weeks to check for significant changes. If it’s true that a granular approach means the Penguin algorithm is acting on a per-page basis, there could be value in analysing organic traffic and backlinks at the page level as well as at the domain level.

2. If there’s been a change in organic traffic and / or rankings without another clear cause, analyse your backlink profile in full:

- When was the last time you reviewed the referring domains and backlinks pointing to your site? If it’s been a while and your website regularly receives backlinks, we recommend making a thorough check. Negative SEO, the practice of building artificial low-quality links to a competitor to attempt to lower their rankings, has seen a resurgence in recent months.

- Google Search Console should be your first port of call when checking backlinks, as these are officially reported on by Google. Fortunately, it’s free. However, any good backlink analysis should be complemented by a thorough third-party tool such as Majestic or Ahrefs – preferably both.

3. Update your disavow file if necessary based on the backlink analysis.

- If your backlink review revealed suspicious or risky links which may be in breach of Google’s Webmaster Guidelines, you may want to consider creating / extending a disavow file.

- Ensure your file is correctly formatted (guidelines here)

4. Stay calm. While we haven’t yet seen the new algorithm in action, the spectre of a long-term algorithmic Penguin hit seems a little less likely due to the purported “real-time” element.

5. Get an expert to look over your link profile.

- Making decisions on disavowing or keeping links can be a challenge. Many webmasters are over-zealous and end up disavowing links which were contributing to their rankings, resulting in a loss of organic traffic. Webmasters and business owners often find that an expert opinion provides peace of mind.
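
For step 3, it may help to see what a correctly formatted disavow file looks like. The file is plain text with one entry per line: a whole domain is written with a `domain:` prefix, an individual page is written as its full URL, and lines starting with `#` are comments. The Python sketch below builds such a file from the results of a backlink review. The function name `build_disavow_lines` and the `.example` domains are hypothetical placeholders for illustration, not part of any Google tool.

```python
from urllib.parse import urlparse


def build_disavow_lines(domains, urls, note=""):
    """Return the lines of a disavow file as a list of strings (illustrative helper)."""
    lines = []
    if note:
        # Lines beginning with "#" are treated as comments.
        lines.append(f"# {note}")

    # Whole-domain entries use the "domain:" prefix; duplicates are dropped.
    seen_domains = set()
    for domain in domains:
        cleaned = domain.strip().lower()
        if cleaned and cleaned not in seen_domains:
            seen_domains.add(cleaned)
            lines.append(f"domain:{cleaned}")

    # Individual pages are listed as full URLs. A URL is skipped when its host
    # exactly matches a domain that is already being disavowed above.
    seen_urls = set()
    for url in urls:
        cleaned = url.strip()
        host = urlparse(cleaned).hostname or ""
        if not cleaned or host in seen_domains or cleaned in seen_urls:
            continue
        seen_urls.add(cleaned)
        lines.append(cleaned)

    return lines


if __name__ == "__main__":
    # Placeholder data standing in for the output of a real backlink review.
    lines = build_disavow_lines(
        domains=["Spam-Directory.example", "spam-directory.example"],
        urls=[
            "http://spam-directory.example/links.html",
            "http://blog.example/paid-post.html",
        ],
        note="Reviewed after Penguin 4.0",
    )
    print("\n".join(lines))
```

Saving the printed lines to a `.txt` file gives something that can be uploaded through Google’s disavow tool. Which links belong in the file remains a judgement call, as step 5 points out, and the sketch makes no attempt to decide that for you.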